Enterprises today rely on fast, data-driven insights, which makes reliable, high-quality data essential for competitive advantage. Data engineering teams play a pivotal role here: they build robust infrastructure, run jobs, and handle diverse requests from analytics and BI teams. To ensure seamless data availability and delivery, data engineers must account for a comprehensive set of dependencies and requirements when designing and building data pipelines.

With the added challenges around data governance, privacy, security, and data quality, data engineering teams need to design and implement data platforms using battle-tested data engineering best practices.

Through this blog post, we’ll shed light on key data engineering best practices to streamline your work and deliver faster insights.

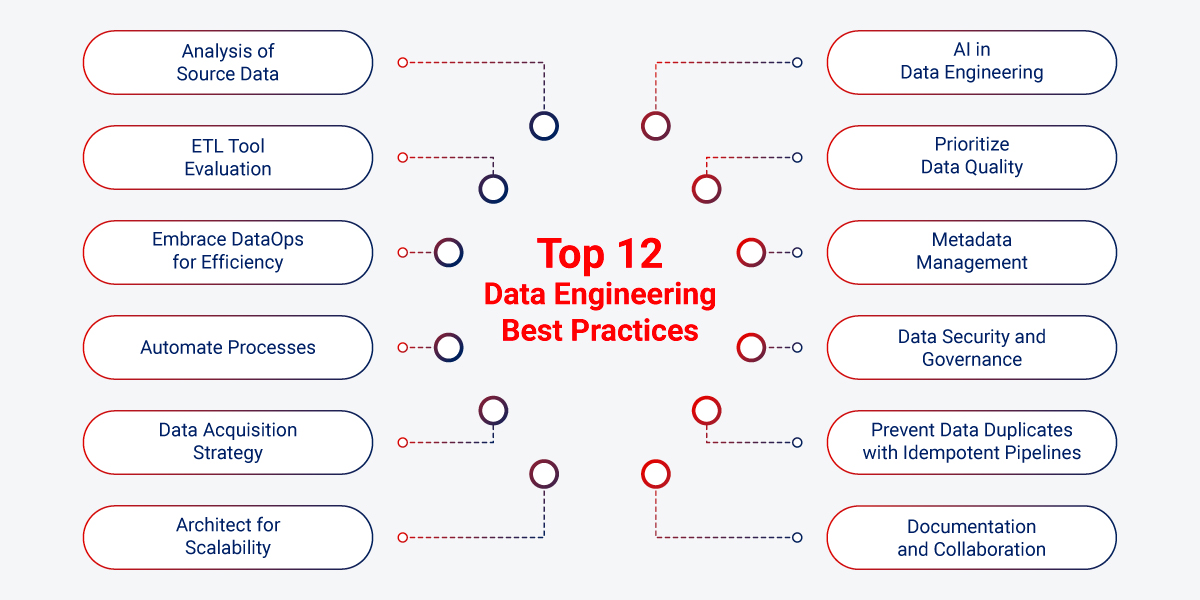

Top 12 Data Engineering Best Practices

Let’s explore some of the best practices in data engineering to help you build clean, usable, and reliable data pipelines, accelerate the pace of development, improve code maintenance, and make working with data easy. This will eventually enable you to prioritize actions and move your data analytics initiatives more quickly and efficiently.

#1 Analysis of Source Data

Analyzing source data and understanding its nature helps reveal potential errors and inconsistencies early on, before they permeate the data pipeline. This proactive approach safeguards the integrity of your data ecosystem and ensures that your data pipelines are built on strong foundations.

- Assess Data Needs & Business Goals: Gain a clear understanding of how you would approach big data analytics at the very outset. Plan what type of data you will collect, where and how it will be stored, and who will analyze it.

- Collect & Centralize Data: Once you have a clear understanding of your data needs, you need to extract all structured, semi-structured, and unstructured data from your vital business applications and systems. Transfer this data to a data lake or data warehouse, where you’ll implement an ELT or ETL process.

- Perform Data Modeling: For analysis, data needs to be centralized in a unified data store. But before transferring your business information to the warehouse, you may want to consider a data model. This process will help you determine how the information is related & how it flows together.

- Interpret Insights: You can use different analytical methods to uncover practical insights from business information. You can analyze historical data, track key processes in real-time, monitor business performance and predict future outcomes.
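To make the idea of analyzing source data concrete, here is a minimal profiling sketch. It assumes the source rows have already been extracted into Python dictionaries; the `profile_rows` function, the sample rows, and the column names are all hypothetical and only illustrate how nulls, duplicate keys, and mixed types can surface before data enters the pipeline.

```python
from collections import Counter


def profile_rows(rows, columns):
    """Summarize null counts, distinct counts, and observed types per column."""
    profile = {}
    for col in columns:
        values = [row.get(col) for row in rows]
        # Treat None and empty strings as missing values.
        non_null = [v for v in values if v not in (None, "")]
        profile[col] = {
            "nulls": len(values) - len(non_null),
            "distinct": len(set(non_null)),
            "types": dict(Counter(type(v).__name__ for v in non_null)),
        }
    return profile


# Hypothetical sample pulled from a source system.
sample = [
    {"order_id": 1, "amount": 19.99, "country": "US"},
    {"order_id": 2, "amount": "24.50", "country": "us"},
    {"order_id": 2, "amount": None, "country": ""},
]

report = profile_rows(sample, ["order_id", "amount", "country"])
for column, stats in report.items():
    print(column, stats)
```

In this sample, the profile would flag a repeated `order_id`, an `amount` column that mixes floats and strings, and inconsistent casing in `country`, which are the kinds of issues that are cheaper to fix at the source than downstream.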

#2 ETL Tool Evaluation

ETL tools streamline data movement from various sources to target locations, providing the insights your finance, customer service, sales, and marketing teams need for informed decision-making. Selecting the right tool is crucial for maximizing efficiencies and matching your specific requirements. Consider these important criteria when evaluating ETL tools as per your business needs:

- Pre-built Connectors and Integrations

- Ease of Use

- Pricing

- Scalability and Performance

- Customer Support

- Security and Compliance

- Batch Processing or Real-Time Processing

- ETL (Extract, Transform, Load) or ELT (Extract, Load, Transform)

#3 Embrace DataOps for Efficiency

DataOps can accelerate data delivery, reduce errors, and enhance overall productivity by improving team communication and collaboration.

- Collaboration: From managing complex data lifecycles to enabling collaboration between data engineers, analysts, and operations teams, DataOps serves as a framework to enable efficient teamwork across the data pipeline.

- Data Quality: DataOps involves continuous integration and continuous deployment (CI/CD) for data pipelines, automated testing, and data quality checks at every stage.

As a data engineering best practice, DataOps makes data pipeline development more agile and responsive, so the patterns, trends, and insights that inform business decisions reach stakeholders sooner.

#4 Automate Processes

The goal is to minimize manual intervention and delays where possible. Evaluate processes that can be scheduled, triggered, or orchestrated based on events. Automated systems scale better and reduce the overhead of managing everything manually. Strike a balance between automation and allowing certain business users flexibility.

- Build workflows to automate end-to-end data integration from source systems to target data stores. Use workflow schedulers like Apache Airflow that allow easy configuration of tasks and dependencies.

- Implement automated alerts and monitoring for Service Level Agreements (SLAs), KPIs, and data validation checks. Get notified before issues impact downstream processes.

- Set up an automated testing framework for data quality, ETL logic, error handling, etc. Run tests as part of CI/CD pipelines.
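The core idea behind a workflow scheduler is running tasks in dependency order. The sketch below shows that idea using only the Python standard library (`graphlib`, available in Python 3.9+). It is not Airflow code; the task names and the `run_pipeline` helper are hypothetical, and a real orchestrator adds scheduling, retries, and monitoring on top.

```python
from graphlib import TopologicalSorter


def run_pipeline(tasks, dependencies):
    """Run each task only after all of its upstream tasks have finished."""
    order = list(TopologicalSorter(dependencies).static_order())
    for name in order:
        tasks[name]()
    return order


log = []

# Hypothetical tasks; each one just records that it ran.
tasks = {
    "extract": lambda: log.append("extract"),
    "transform": lambda: log.append("transform"),
    "validate": lambda: log.append("validate"),
    "load": lambda: log.append("load"),
}

# Each key maps to the set of tasks that must complete before it.
dependencies = {
    "extract": set(),
    "transform": {"extract"},
    "validate": {"transform"},
    "load": {"validate"},
}

executed = run_pipeline(tasks, dependencies)
print(executed)
```

Declaring dependencies as data, rather than hard-coding call order, is what lets an orchestrator work out which tasks can run, rerun, or be skipped.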

#5 Data Acquisition Strategy

Data acquisition is a critical best practice in data engineering that involves discovering and integrating valuable external data into your system. The key is to identify the specific insight you need from this information and how it will be used. Smart planning ensures you don’t waste time and resources on irrelevant data.

- One-click Ingestion: This method efficiently moves all existing data to a target system. A steady stream of accessible data is crucial for analytics and downstream reporting tools. One-click ingestion lets you ingest data available in various formats into an existing Azure Data Explorer table and create mapping structures as needed.

- Incremental Ingestion: The incremental extract pattern allows you to extract only changed data from your source tables, views, or queries. It reduces the load on your source systems and optimizes overall ETL processes. To choose the incremental ingestion type that meets your needs, consider your source data’s format, volume, velocity, and access criteria.

Errors during data ingestion have a cascading negative effect on every subsequent process. Inaccurate data results in flawed reports, spurious analytics, and poor decision-making. Therefore, a well-defined data acquisition strategy is essential for organizations to effectively collect the right data, save resources, and ensure the quality and consistency of the foundation for data-driven insights.
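One common way to implement the incremental extract pattern is a watermark: remember the latest change timestamp you have processed and request only newer rows next time. The sketch below illustrates this with an in-memory SQLite database. The `source_orders` table, its `updated_at` column, and the `extract_incremental` helper are assumptions made for the example, not part of any specific tool.

```python
import sqlite3


def extract_incremental(conn, last_watermark):
    """Return rows changed after last_watermark, plus the new watermark."""
    rows = conn.execute(
        "SELECT id, payload, updated_at FROM source_orders "
        "WHERE updated_at > ? ORDER BY updated_at",
        (last_watermark,),
    ).fetchall()
    # Keep the old watermark when nothing new arrived.
    new_watermark = rows[-1][2] if rows else last_watermark
    return rows, new_watermark


conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE source_orders "
    "(id INTEGER PRIMARY KEY, payload TEXT, updated_at TEXT)"
)
conn.executemany(
    "INSERT INTO source_orders VALUES (?, ?, ?)",
    [
        (1, "a", "2024-01-01T00:00:00"),
        (2, "b", "2024-01-02T00:00:00"),
        (3, "c", "2024-01-03T00:00:00"),
    ],
)

# First run picks up everything after the stored watermark.
first_batch, watermark = extract_incremental(conn, "2024-01-01T00:00:00")
# A second run with the updated watermark finds nothing new.
second_batch, watermark_after = extract_incremental(conn, watermark)
print(len(first_batch), len(second_batch), watermark_after)
```

The strict `>` comparison can miss rows that share the exact watermark timestamp, so real pipelines often add a tie-breaker column or a small overlap window combined with deduplication.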

#6 Architect for Scalability

Scalability is critical in data engineering as data volumes and processing needs continuously grow. This best practice for data engineering involves designing flexible and elastic data infrastructure that can handle increasing workloads without sacrificing performance or availability.

- Plan infrastructure that can easily scale up or down to meet changing data processing needs. Use cloud platforms such as AWS, Azure, or GCP that provide auto-scaling capabilities.

- Design distributed systems capable of handling large volumes of data ingestion, processing, and storage. Leverage technologies like Hadoop, Spark, Kafka, etc.

- Modularize components so that bottlenecks can be independently identified and scaled.

#7 AI in Data Engineering

Leveraging AI can significantly improve various aspects of the data lifecycle, from automating data processing tasks and optimizing data pipelines to delivering advanced analytics. It supports real-time data processing by analyzing data automatically as it arrives, which enables faster decision-making. Integrating AI tools also automates routine tasks, enhances data quality, and streamlines workflows. Modern tools like Apache Spark MLlib, TensorFlow Extended (TFX), and Kubeflow help integrate AI capabilities into existing data infrastructure. Overall, AI helps data engineers improve the speed, efficiency, and scalability of data processes.

#8 Prioritize Data Quality

Data quality directly impacts critical business functions like lead generation, sales forecasting, and customer analytics. Data engineering teams must, therefore, prioritize data quality.

- Make Data Validation a Core Habit: Integrate comprehensive validation rules and checks at every stage of your process – ingestion, processing, and serving.

- Automated Quality Monitoring: Manually inspecting data quality can be tedious and error-prone. Implement automated tracking of critical metrics like missing data, anomalies, and schema drifts. Set up smart alerts to proactively address issues before they escalate.

- Mastering Data Lineage: Understand the complete journey of your enterprise data – from its origin to all transformations and final destinations. Maintain a thorough lineage and audit trail for efficient troubleshooting. When problems arise, this lineage is your secret weapon for quick root cause analysis.
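Validation rules like those described above can start out very simple. The following sketch assumes records arrive as dictionaries and expresses each rule as a description plus a check function. The `RULES` table and the `validate_record` helper are hypothetical; dedicated data quality frameworks offer much richer versions of the same idea.

```python
def validate_record(record, rules):
    """Return a list of human-readable rule violations for one record."""
    errors = []
    for field, (description, check) in rules.items():
        value = record.get(field)
        if not check(value):
            errors.append(f"{field}: {description} (got {value!r})")
    return errors


# Hypothetical rules: field name -> (description, check function).
RULES = {
    "email": ("must contain '@'", lambda v: isinstance(v, str) and "@" in v),
    "age": (
        "must be an integer between 0 and 130",
        lambda v: isinstance(v, int) and 0 <= v <= 130,
    ),
}

good = validate_record({"email": "a@example.com", "age": 34}, RULES)
bad = validate_record({"email": "not-an-email", "age": -5}, RULES)
print(good)
print(bad)
```

Running the same rule set at ingestion, processing, and serving keeps the definition of "valid" consistent across stages, and the violation messages can feed automated alerts.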

#9 Metadata Management

Metadata provides the context and background information about your data assets – datasets, pipelines, models, and more. In today’s complex data landscapes, effective metadata management is essential for data engineers.

Think of metadata as your map for navigating your entire data ecosystem effectively. It allows you to understand your data:

- Where your data came from

- How it’s been transformed

- Who owns it

- And how reliable it is

Without up-to-date and accurate metadata, you’re essentially flying blind. A centralized metadata repository acts as a single source of truth, making it easy to search and access this critical information across the organization. Larger companies often invest in dedicated metadata catalogs with advanced capabilities, such as data lineage tracking and collaboration tools.

But metadata management isn’t just about creating a fancy repository. It’s about building a culture where consistent documentation and updating of metadata are integral parts of your evolving data pipelines and development workflows, not an afterthought.

Implementing a robust metadata management strategy as a prominent data engineering best practice can unlock the true value of your data assets, foster collaboration, and establish a solid foundation for data-driven decision-making across the organization.
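As a rough illustration of what a metadata entry might capture, the sketch below models the four questions listed earlier (origin, transformations, ownership, reliability) as a small in-memory catalog. The `DatasetMetadata` and `MetadataCatalog` classes, their fields, and the sample values are all hypothetical; a production catalog would persist entries and integrate with lineage tooling.

```python
from dataclasses import dataclass, field


@dataclass
class DatasetMetadata:
    name: str
    source: str                      # where the data came from
    owner: str                       # who owns it
    transformations: list = field(default_factory=list)  # how it was transformed
    quality_score: float = 0.0       # how reliable it is (assumed 0.0 to 1.0 scale)


class MetadataCatalog:
    """A minimal in-memory stand-in for a centralized metadata repository."""

    def __init__(self):
        self._entries = {}

    def register(self, entry):
        self._entries[entry.name] = entry

    def lookup(self, name):
        return self._entries.get(name)

    def owned_by(self, owner):
        return sorted(e.name for e in self._entries.values() if e.owner == owner)


catalog = MetadataCatalog()
catalog.register(
    DatasetMetadata(
        name="orders_clean",
        source="crm.orders",
        owner="sales-data-team",
        transformations=["deduplicate", "normalize_currency"],
        quality_score=0.97,
    )
)
catalog.register(
    DatasetMetadata(name="leads_raw", source="web_forms", owner="marketing-data-team")
)

entry = catalog.lookup("orders_clean")
print(entry.source, entry.transformations, catalog.owned_by("sales-data-team"))
```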

#10 Data Security and Governance

Prioritize data security, comprehensive documentation, and clean maintainable code to create resilient and sustainable data pipelines. These practices safeguard your organization’s valuable data assets and facilitate collaboration, knowledge sharing, and the ability to adapt to changing requirements over time.

- Implement robust data security measures, such as encryption, access controls, and auditing.

- Establish data governance policies and procedures to ensure regulatory compliance and data privacy.

- Maintain data retention and archiving policies to manage data lifecycle and storage costs.

#11 Prevent Data Duplicates with Idempotent Pipelines

Implement idempotent data pipelines to ensure that repeated operations produce consistent results without creating duplicates or unintended side effects. Idempotency is crucial for handling pipeline failures, retries, and concurrent operations reliably. Common implementation strategies include:

- Assigning auto-generated unique identifiers (IDs) or universally unique identifiers (UUIDs) to every record, so the pipeline can detect and skip records it has already inserted

- Maintaining execution logs or checkpoints to track processed data

- Designing atomic transactions for complex transformations

Implementing this best practice for data engineering helps make the pipeline resilient to failures and redundancies.
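Here is a minimal sketch of an idempotent load step, assuming each record carries a stable unique key. It uses SQLite's `INSERT OR REPLACE` inside a transaction so that a retried batch overwrites existing rows instead of duplicating them. The `customers` table, the key values, and the `load_batch` helper are hypothetical.

```python
import sqlite3


def load_batch(conn, records):
    """Load a batch atomically; re-running the same batch leaves the same state."""
    with conn:  # commits on success, rolls back if any statement fails
        conn.executemany(
            "INSERT OR REPLACE INTO customers (customer_id, name) VALUES (?, ?)",
            records,
        )


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE customers (customer_id TEXT PRIMARY KEY, name TEXT)")

batch = [("c-001", "Ada"), ("c-002", "Grace")]
load_batch(conn, batch)
load_batch(conn, batch)  # simulated retry after a failure

row_count = conn.execute("SELECT COUNT(*) FROM customers").fetchone()[0]
print(row_count)
```

The primary key on `customer_id` is what makes the retry safe: without a unique key, the second call would insert two more rows.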

#12 Documentation and Collaboration

- Create and Maintain Documentation: Keep detailed documentation covering data pipeline architecture, data models, and operating procedures to streamline maintenance and ease onboarding for new team members.

- Foster Collaboration Across Teams: Work closely with data scientists and analysts to understand their requirements, ensuring that data systems effectively meet their needs. Establish clear communication channels and standardized processes for requirement gathering and feedback.

Why is Rishabh Software the Ideal Choice for Your Data Engineering Needs?

Rishabh’s data engineering services can help your business advance to the next level of data usage, data management, and data automation by building efficient data pipelines that modernize platforms and enable rapid AI adoption. Our expert team of data engineers leverages industry best practices and advanced analytics solutions to help you organize and manage your data better, generate faster insights, and build predictive systems so that you extract the highest ROI from your data investments.

We help organizations to advance to the next level of data usage by providing data discovery & maturity assessment, data quality checks & standardization, cloud-based solutions for large volumes of information, batch data processing (with optimization of the database), data warehouse platforms and more. We help develop data architecture by integrating new & existing data sources to create more effective data lakes. Further, we can also incorporate ETL pipelines, data warehouses, BI tools & governance processes.

Frequently Asked Questions

Q: What is data engineering, and what are its main components?

A: It’s the practice of designing, building, and managing data pipelines that ingest, transform, and store data for analytical use cases. The main components of data engineering include:

- Data Ingestion: Getting data from various sources such as databases, APIs, files, and streams into the pipeline. Two popular tools for this are Apache Kafka and Apache NiFi.

- Data Processing: Transforming and cleansing data for analysis. This includes steps like parsing, standardization, and deduplication. Processing engines like Spark and Flink are commonly used.

- Data Storage: Persisting processed data in storage optimized for analytics, such as data warehouses, data lakes, and databases. Examples are Snowflake, Amazon Redshift, and Amazon S3.

- Workflow Orchestration: Managing execution of pipelines end-to-end using workflow tools such as Airflow and Azure Data Factory.

- Infrastructure Management: Provisioning and managing IT infrastructure like servers, networks, databases used in the data stack.

- Monitoring: Tracking pipelines to ensure successful runs, SLA adherence and data quality. Involves logs, alerts, dashboards.

Q: What are data pipelines?

A: A data pipeline is a series of processing steps that ingests data from source systems, processes and transforms it, and makes it available for consumption through analytics applications. The key aspects of data pipelines are:

- Automated: Workflows are scheduled or triggered automatically without manual intervention.

- Modular: Different processing steps are compartmentalized into discrete stages or tasks.

- Scalable: Pipeline workflows can handle growing data volumes by scaling compute resources.

- Reliable: They incorporate retries, error handling, and alerting to maintain continuity of operations.

- Reproducible: Changes to pipelines are version controlled and allow re-running previous instances.

- Monitored: Logs, metrics, and dashboards provide observability into pipeline runs and data flow.

Data pipelines move vast amounts of data efficiently through these processing steps to make it analytics-ready.
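The "Reliable" property is often implemented with retries and exponential backoff around each task. The sketch below shows one way to do that in plain Python. The `run_with_retries` helper, its default parameters, and the flaky task are hypothetical, and orchestrators typically provide this behavior as configuration rather than hand-written code.

```python
import time


def run_with_retries(task, max_attempts=3, base_delay=0.01, sleep=time.sleep):
    """Run task, retrying failures with exponentially growing delays."""
    for attempt in range(1, max_attempts + 1):
        try:
            return task()
        except Exception:
            if attempt == max_attempts:
                raise  # give up and surface the error for alerting
            sleep(base_delay * 2 ** (attempt - 1))


attempts = {"count": 0}


def flaky_extract():
    """Hypothetical task that fails twice before succeeding."""
    attempts["count"] += 1
    if attempts["count"] < 3:
        raise ConnectionError("source temporarily unavailable")
    return "ok"


result = run_with_retries(flaky_extract)
print(result, attempts["count"])
```

Retries are only safe when the task itself is idempotent, which is why retry logic and idempotent pipeline design usually go together.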

Q: What is data warehousing and how is it used in data engineering?

A: A data warehouse is a central repository built for analysis and reporting. It integrates data from multiple sources into a single store optimized for analytical querying. In data engineering, data warehouses are used for:

- Structuring and organizing data from diverse sources into analysis-friendly schemas like star or snowflake schema.

- Allowing analysts to query the processed, integrated data using SQL, which is simpler than coding transformations.

- Providing high query performance on large datasets by using columnar storage, materialized aggregates, and partitioning.

- Enabling historical analysis by persisting and versioning the transformed data for use across time periods.

- Facilitating data visualization, dashboards, and reporting for business users using BI tools that run on top of data warehouses.

Robust data warehousing is a critical backbone of analytics and thereby drives major data engineering efforts.
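To illustrate the star schema mentioned above, the sketch below builds a tiny fact table and one dimension table in an in-memory SQLite database and runs a typical analytical query. SQLite stands in for a real warehouse here, and the table names, columns, and values are made up for the example.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript(
    """
    -- Dimension table: descriptive attributes used for grouping and filtering.
    CREATE TABLE dim_product (
        product_id INTEGER PRIMARY KEY,
        category   TEXT
    );
    -- Fact table: one row per sale, referencing the dimension by key.
    CREATE TABLE fact_sales (
        sale_id    INTEGER PRIMARY KEY,
        product_id INTEGER REFERENCES dim_product(product_id),
        amount     REAL
    );
    INSERT INTO dim_product VALUES (1, 'books'), (2, 'games');
    INSERT INTO fact_sales VALUES (1, 1, 10.0), (2, 1, 15.0), (3, 2, 40.0);
    """
)

# Typical analytical query: aggregate facts, grouped by a dimension attribute.
totals = conn.execute(
    """
    SELECT d.category, SUM(f.amount)
    FROM fact_sales AS f
    JOIN dim_product AS d ON d.product_id = f.product_id
    GROUP BY d.category
    ORDER BY d.category
    """
).fetchall()
print(totals)
```

Keeping measures in the fact table and descriptive attributes in dimension tables is what lets analysts answer most questions with a join and a `GROUP BY`.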
