We’ve all seen the power of Gen AI by now. From producing lifelike text with ChatGPT to generating striking visuals with diffusion models like DALL·E, generative AI can do all this and more.

But let’s be honest: building a generative AI solution can be tricky. It’s exciting, yes, but numerous challenges, tough decisions, uncertainties, and an unclear path can crop up along the way.

This article is your step-by-step guide to building a GenAI solution that is efficient, ethical, and scalable.

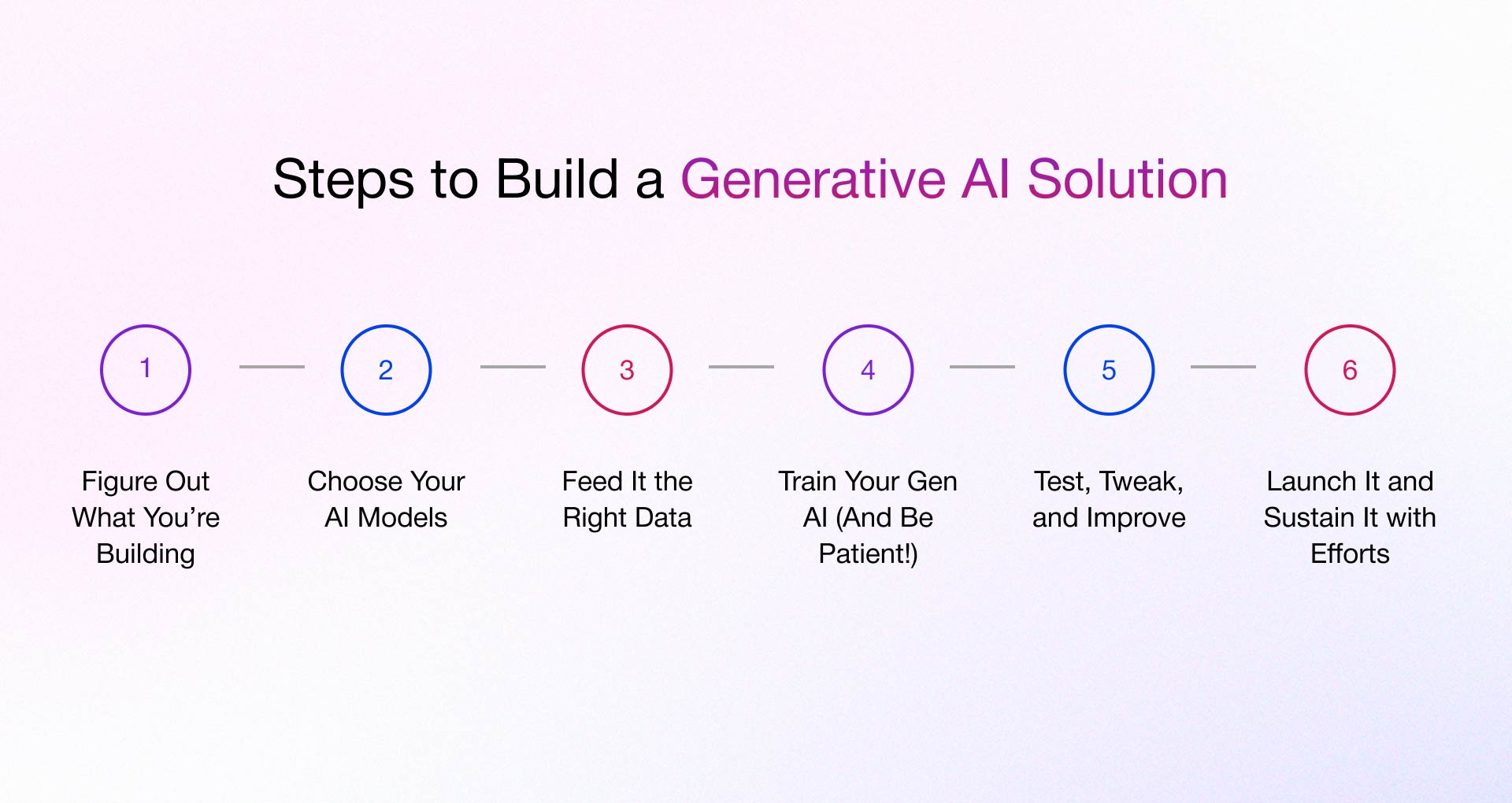

Steps for Building Your Generative AI Model

To build a generative AI solution, you need a clear goal, the right model, clean and relevant data, and a robust training, testing, and deployment process.

Figure Out What You’re Building:

Building a generative AI solution comes with a flood of questions, and honestly, answering them is usually the hardest part and can take the longest. So, don’t just think about what your generative AI model can do; think about why it should exist in the first place.

Ask questions:

- What problem is it solving?

- Who is the end user?

- How will it help my target audience?

- What features are essential vs optional?

Suppose you’re developing a Gen AI solution for healthcare. Start by asking questions like these:

- Can it accelerate drug discovery?

- Can it personalize my patients’ treatment plans?

- Can synthetic medical images improve AI-powered diagnostics?

- What administrative tasks can it automate?

- Is it cost-effective at scale?

Pro tip: Consult technology experts early. They’ll help validate feasibility, avoid poor architectural choices, and reduce downstream work.

Choose the Right AI Model:

Now that you know what you’re building, decide which type of generative model fits your use case and the data you have available. Here are the key types:

- Large Language Models (LLMs) – Do you want the model to understand and generate human-like text (e.g., ChatGPT, Google Gemini)? They are best for chatbots, content creation, and even coding assistance.

- Diffusion Models – Used for creating stunning, high-quality images by learning to turn random noise into a coherent picture, step by step. Examples include DALL·E and Stable Diffusion. These models are ideal for marketing, product design, or visual content.

- Generative Adversarial Networks (GANs) – Think deepfakes, AI-generated fashion designs, or realistic virtual characters. GANs pit two AI networks against each other—one generates, the other critiques—leading to highly realistic outputs.

- Variational Autoencoders (VAEs) – Need AI to generate slight variations of an existing image or sound? VAEs are great for creating diverse but realistic samples, often used in medical imaging and speech synthesis.

- Autoregressive Models – These are the storytellers of AI. They generate sequences (like text, music, or audio) by predicting one piece at a time. The GPT series and PixelRNN fall into this category.

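- Transformers – This is the tech behind LLMs! Transformers use a mechanism called self-attention to weigh how every part of the input relates to every other part, which lets them train efficiently on massive amounts of data and generate coherent responses. They power AI-driven search engines, translations, and writing assistants.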

- Flow-Based Models – These models learn an invertible transformation between simple random noise and the data distribution, which lets them compute exact likelihoods and generate crisp, high-quality images and videos. That makes them useful in creative and entertainment industries.

- Neural Radiance Fields (NeRFs) – Imagine turning a handful of 2D images into a full 3D scene—that’s what NeRFs do. Perfect for gaming, virtual reality, and even real estate walkthroughs.
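To make the autoregressive idea concrete (generate one piece at a time, each piece conditioned on what came before), here is a deliberately tiny sketch. It is a character-level bigram model, vastly simpler than GPT, and the training sentence is made up for illustration. The principle of sampling the next token from a learned distribution and feeding it back in is the same one larger models use.

```python
import random
from collections import Counter, defaultdict


def train_bigram(text: str) -> dict:
    """Count how often each character follows each other character."""
    counts = defaultdict(Counter)
    for prev, nxt in zip(text, text[1:]):
        counts[prev][nxt] += 1
    return counts


def generate(counts: dict, start: str, length: int, seed: int = 0) -> str:
    """Autoregressive sampling: each new character depends on the previous one."""
    rng = random.Random(seed)
    out = [start]
    for _ in range(length):
        followers = counts.get(out[-1])
        if not followers:  # no known continuation, so stop early
            break
        chars = list(followers.keys())
        weights = list(followers.values())
        out.append(rng.choices(chars, weights=weights, k=1)[0])
    return "".join(out)


corpus = "the cat sat on the mat. the dog sat on the log."
model = train_bigram(corpus)
print(generate(model, "t", 40))
```

Swapping the bigram counts for a neural network that looks at the whole preceding context is, at a very high level, what turns this toy into a language model.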

Feed It the Right Data:

Before you start using Gen AI for your business, remember that your model is only as good as the data behind it. Data is what makes the whole platform run well, so focus on:

- Right Data Sources – Pull data from trustworthy, verified inputs like user interactions, knowledge bases, industry reports, or live data feeds.

- Quality & Relevance – Prioritize data that is clean, varied, and useful. If your datasets are messy or biased, your AI will copy those problems. Bad input leads to bad output!

- Accurate Labeling – Use automatic tagging, crowdsourcing, or AI-assisted labeling/annotation tools to speed things up.

- Cleaning Data – To make your gen AI model smarter, tidy up your data before you feed it in: remove duplicates and noise, normalize formats, and tokenize text. Techniques like data augmentation can then expand a dataset that is too small.

- Balanced Splits – Separate your dataset into three parts (training, validation, and testing sets) so you can tune the model on one set and measure how well it generalizes to data it has never seen.

- Storage Planning – Ensure you have storage solutions that can grow with your AI, whether you choose cloud-based options or a data warehouse. This way, your AI will always have the right information at hand.

Avoid:

- Unverified web scrapes

- Redundant or biased records

- Feeding raw, unstructured data without preprocessing
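The cleaning and splitting steps above can be sketched in plain Python. This is a minimal illustration, assuming text records held in memory; the `min_chars` threshold and the 80/10/10 split ratios are illustrative choices, not recommendations, and real pipelines usually add near-duplicate detection and domain-specific filters.

```python
import random
import re
import unicodedata


def normalize(text: str) -> str:
    """Unicode-normalize, lowercase, and collapse runs of whitespace."""
    text = unicodedata.normalize("NFKC", text)
    return re.sub(r"\s+", " ", text).strip().lower()


def clean(records: list, min_chars: int = 10) -> list:
    """Normalize, drop too-short records, and remove exact duplicates (order kept)."""
    seen = set()
    cleaned = []
    for rec in records:
        norm = normalize(rec)
        if len(norm) < min_chars or norm in seen:
            continue
        seen.add(norm)
        cleaned.append(norm)
    return cleaned


def split(records: list, train: float = 0.8, val: float = 0.1, seed: int = 42):
    """Shuffle once with a fixed seed, then cut into train / validation / test sets."""
    shuffled = records[:]
    random.Random(seed).shuffle(shuffled)
    n_train = int(len(shuffled) * train)
    n_val = int(len(shuffled) * val)
    return (
        shuffled[:n_train],
        shuffled[n_train:n_train + n_val],
        shuffled[n_train + n_val:],
    )


raw = ["Patient reported mild headache.", "patient  reported mild headache.", "ok"]
raw += [f"Sample clinical note number {i}" for i in range(20)]
data = clean(raw)
train_set, val_set, test_set = split(data)
print(len(data), len(train_set), len(val_set), len(test_set))  # 21 16 2 3
```

Splitting only after deduplication matters: if the same record lands in both training and test sets, test scores will look better than the model really is.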

Train Your Gen AI (And Be Patient!):

Training your generative AI model is like teaching a kid a new skill. It takes time, patience, and a lot of repetition, and let’s be honest, there will be a few tantrums along the way.

- This isn’t a microwave situation. It might take hours, days, or even weeks depending on the complexity of your model and the sheer volume of data. And those estimates? Yes, they can easily double when you hit a snag.

- You’ll need a good computer, maybe even a powerful GPU, to handle the heavy lifting. Or consider cloud computing – but even then, you’ll be wrestling with configuration settings, API keys, and those lovely ‘out-of-memory’ errors.

Expect these technical aspects:

- Gradient Descent Issues: Your model’s learning might get stuck in a rut or diverge completely. You’ll be tweaking learning rates and optimizers like a mad scientist.

- Overfitting/Underfitting: Your AI might memorize the training data perfectly but fail miserably on new inputs (overfitting), or it might be too simple to capture the underlying patterns at all (underfitting). Prepare for lots of data augmentation, regularization, and model parameter adjustments.

- Data Pipeline Bottlenecks: Getting the data from storage to the GPU efficiently? That’s a whole other challenge. You might encounter I/O errors, data format incompatibility, and slow transfer speeds.

- Dependency Hell: Managing the myriad Python libraries and frameworks? Version conflicts and dependency errors are practically guaranteed.

- Resource Management: Keep track of GPU memory, CPU usage, and network bandwidth. It’s a delicate balancing act, and one misstep can bring your training to a screeching halt.

- Model Checkpointing and Recovery: Plan for power outages, system crashes, and unexpected shutdowns. You’ll need robust checkpointing to save your progress and avoid starting from scratch.
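Here is a minimal sketch of crash-safe checkpointing with resume. It assumes the training state fits in a JSON-serializable dict and uses a placeholder loss in place of a real training step; with a deep learning framework you would save model and optimizer state with its own serializer (PyTorch’s `torch.save`, for instance), but the write-then-swap pattern is the same.

```python
import json
import os
import tempfile


def save_checkpoint(path: str, state: dict) -> None:
    """Write to a temp file first, then swap it in with os.replace.

    On POSIX filesystems the rename is atomic, so a crash mid-write
    leaves the previous checkpoint intact.
    """
    directory = os.path.dirname(os.path.abspath(path))
    fd, tmp_path = tempfile.mkstemp(dir=directory, suffix=".tmp")
    with os.fdopen(fd, "w") as f:
        json.dump(state, f)
        f.flush()
        os.fsync(f.fileno())  # make sure bytes reach the disk before the swap
    os.replace(tmp_path, path)


def load_checkpoint(path: str) -> dict:
    """Resume from the last checkpoint, or start fresh if none exists."""
    if not os.path.exists(path):
        return {"step": 0, "loss_history": []}
    with open(path) as f:
        return json.load(f)


def train(path: str, total_steps: int, save_every: int = 10) -> dict:
    """Hypothetical training loop that resumes wherever the last checkpoint stopped."""
    state = load_checkpoint(path)
    for step in range(state["step"], total_steps):
        loss = 1.0 / (step + 1)  # placeholder for a real training step
        state["loss_history"].append(loss)
        state["step"] = step + 1
        if state["step"] % save_every == 0:
            save_checkpoint(path, state)
    return state
```

If the process dies at step 25, the next run picks up from step 20 (the last saved checkpoint) instead of step 0. Choosing `save_every` is a trade-off between lost work and the time spent writing checkpoints.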

Pro tip: Use cloud-based training environments (AWS, Azure, GCP) to scale resources without investing in dedicated hardware.

Test, Tweak, and Improve:

Now, you might notice that the output is a bit hit-or-miss, or that the model struggles with more complex prompts. That’s where fine-tuning performance comes in.

Take hyperparameters, for example. Adjusting settings like the number of layers, hidden units, or dropout rate can significantly affect how well your model performs and generalizes over time. Get those settings wrong, and you could end up with overfitting (where the model memorizes the data instead of learning from it) or underfitting (where it fails to capture important patterns).
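A simple way to explore those settings is a grid search: train once per combination and keep the configuration with the lowest validation loss. In this sketch, `train_and_validate` is a hypothetical stand-in that returns a made-up loss so the code runs; in practice it would train a model and evaluate it on the validation split. The search space values are also illustrative.

```python
import itertools


def train_and_validate(num_layers: int, hidden_units: int, dropout: float) -> float:
    """Hypothetical stand-in: return a validation loss for one configuration.

    Replace with real training plus evaluation on the validation set.
    """
    # Toy loss surface with its minimum at 4 layers, 256 units, 0.1 dropout.
    return (num_layers - 4) ** 2 * 0.1 + abs(hidden_units - 256) / 256 + abs(dropout - 0.1)


search_space = {
    "num_layers": [2, 4, 6],
    "hidden_units": [128, 256, 512],
    "dropout": [0.0, 0.1, 0.3],
}


def grid_search(space: dict):
    """Try every combination and keep the one with the lowest validation loss."""
    best_params, best_loss = None, float("inf")
    names = list(space)
    for values in itertools.product(*(space[n] for n in names)):
        params = dict(zip(names, values))
        loss = train_and_validate(**params)
        if loss < best_loss:
            best_params, best_loss = params, loss
    return best_params, best_loss


best, loss = grid_search(search_space)
print(best, loss)
```

Grid search grows multiplicatively with every new hyperparameter (27 runs here), so for expensive models random search or Bayesian optimization is usually a better use of compute.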

This is where thorough testing plays a major role in improving your generative AI model. While standard metrics like BLEU[1] and ROUGE[2] are useful, true validation means putting your model through its paces with unusual inputs, keeping an eye on bias, and refining your prompts. Keep adjusting, testing, and improving, because the more refined your model is, the better the results will be!
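To see what a metric like ROUGE actually measures, here is a bare-bones ROUGE-1 sketch: it counts overlapping words (unigrams) between a generated text and a reference. It assumes simple whitespace tokenization; established ROUGE implementations add proper tokenization, optional stemming, and longer n-gram variants.

```python
from collections import Counter


def rouge1(candidate: str, reference: str) -> dict:
    """ROUGE-1: unigram overlap between generated text and a reference."""
    cand = Counter(candidate.lower().split())
    ref = Counter(reference.lower().split())
    overlap = sum((cand & ref).values())  # matches clipped to the smaller count
    if overlap == 0:
        return {"precision": 0.0, "recall": 0.0, "f1": 0.0}
    precision = overlap / sum(cand.values())
    recall = overlap / sum(ref.values())
    f1 = 2 * precision * recall / (precision + recall)
    return {"precision": precision, "recall": recall, "f1": f1}


scores = rouge1("the cat sat on the mat", "the cat is on the mat")
print(scores)  # precision, recall, and f1 all equal 5/6
```

Notice what this rewards: shared words, not shared meaning. A correct paraphrase that uses different vocabulary scores poorly, which is exactly why these metrics need to be paired with other checks.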

Pro tip: Think beyond technical accuracy—optimize for reliability, usefulness, and user trust.

Launch It and Sustain It:

Your model is all set! Inference is running smoothly, latency is minimal, and the infrastructure can grow with your needs. But remember, launch day is just the start of the journey.

Real users bring real challenges. That’s why monitoring, logging, and anomaly detection are crucial for spotting issues early. Automated retraining and feedback loops keep your model on top of its game, and regular version updates and API tweaks help maintain top-notch performance.
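As one small example of anomaly detection in production, the sketch below flags response latencies that sit far above a rolling average, using a z-score. The window size, the 3-standard-deviation threshold, and the warm-up count are illustrative assumptions; in a real deployment this logic would typically live in your monitoring stack and feed alerts rather than return booleans.

```python
import math
from collections import deque


class LatencyMonitor:
    """Flag requests whose latency is unusually high versus a rolling window."""

    def __init__(self, window: int = 100, z_threshold: float = 3.0, min_samples: int = 20):
        self.samples = deque(maxlen=window)  # oldest samples drop off automatically
        self.z_threshold = z_threshold
        self.min_samples = min_samples  # don't judge until there is a baseline

    def observe(self, latency_ms: float) -> bool:
        """Record a latency and return True if it looks anomalous."""
        is_anomaly = False
        if len(self.samples) >= self.min_samples:
            mean = sum(self.samples) / len(self.samples)
            var = sum((x - mean) ** 2 for x in self.samples) / len(self.samples)
            std = math.sqrt(var)
            if std > 0 and (latency_ms - mean) / std > self.z_threshold:
                is_anomaly = True
        self.samples.append(latency_ms)
        return is_anomaly


monitor = LatencyMonitor()
for ms in [100, 102, 98, 101, 99] * 5:
    monitor.observe(ms)
print(monitor.observe(450))  # a sudden spike is flagged
```

The same rolling-statistics idea works for other signals worth watching, such as error rates, output length, or the share of responses your safety filter blocks.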

As time goes on, these updates will feel like second nature. Your AI will keep learning, adapting, and making a genuine impact just like your team does.

Reminder: GenAI models require care and governance—don’t treat them like static software products.

Key Challenges in Building a Generative AI Solution and How to Overcome Them

Building a generative AI solution involves multiple challenges—including data quality, model complexity, evaluation uncertainty, and ethical concerns—that require thoughtful handling at every stage.

Let’s look at the most common roadblocks and how to solve them effectively.

1. Data Quality is Everything (And Often a Mess!)

You have probably heard the phrase, “garbage in, garbage out.” It’s especially true in building custom generative AI solutions. These models only perform as well as the data they’re trained on. If your dataset is inconsistent, biased, or poorly labeled, your output will reflect that, sometimes in surprising and not-so-good ways.

What to do:

- Curate a diverse and well-balanced dataset.

- Keep an eye out for hidden biases and skewed representation.

- Use data augmentation or synthetic data to fill in the gaps where needed.
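A quick first check for skewed representation is simply counting how often each group appears. This sketch compares every group’s share against an even split and flags groups far below it. Both the even-split baseline and the 50% tolerance are assumptions for illustration; the right target distribution depends on the population your model will actually serve.

```python
from collections import Counter


def representation_report(labels: list, tolerance: float = 0.5) -> dict:
    """Compare each group's share of the data with an even split.

    A group is flagged when its share falls below `tolerance` times
    the even share (with the default, below half its "fair" share).
    """
    counts = Counter(labels)
    total = sum(counts.values())
    even_share = 1 / len(counts)
    report = {}
    for group, n in counts.items():
        share = n / total
        report[group] = {
            "count": n,
            "share": round(share, 3),
            "under_represented": share < tolerance * even_share,
        }
    return report


# Hypothetical age-group labels for a medical imaging dataset.
labels = ["adult"] * 70 + ["pediatric"] * 25 + ["geriatric"] * 5
for group, stats in representation_report(labels).items():
    print(group, stats)
```

Flagged groups are candidates for targeted data collection, augmentation, or synthetic data, as the list above suggests.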

Pro tip: Don’t rely on large volumes alone—go for relevance and consistency.

2. The Model Is Big… Really Big

Generative AI models, especially LLMs, can have billions of parameters. From long inference times to huge memory demands, things can quickly get out of hand, especially when you’re building real-time applications.

To keep things in control:

- Try model optimization techniques like distillation or quantization.

- Use cloud-native infrastructure that can scale when you need it to.

- Distribute workloads smartly to keep performance smooth.
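To show what quantization does under the hood, here is a minimal sketch of symmetric int8 quantization for a single list of weights. Each float is mapped to an integer in [-127, 127] plus one shared scale factor, so storage drops from 4 bytes (float32) to 1 byte per weight at the cost of a small rounding error. Production toolchains typically quantize per channel and calibrate activations as well; this per-tensor version only illustrates the core idea.

```python
def quantize_int8(weights: list) -> tuple:
    """Symmetric per-tensor int8 quantization: w is approximated by scale * q."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    # Round to the nearest integer step and clamp to the int8 range we use.
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q: list, scale: float) -> list:
    """Map the integers back to approximate float weights."""
    return [v * scale for v in q]


weights = [0.42, -1.27, 0.003, 0.9, -0.5]
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
max_error = max(abs(a - b) for a, b in zip(weights, restored))
print(q, scale, max_error)
```

The rounding error per weight is at most half a quantization step (`scale / 2`), which is why well-quantized models often lose surprisingly little accuracy.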

Fact: Smaller, optimized models can often match larger ones on specific tasks when tailored well.

3. Evaluating Outputs Isn’t Straightforward

How do you measure creativity, tone, or usefulness? Traditional metrics like BLEU or ROUGE measure word overlap with reference texts, which captures surface similarity but fails to assess meaning, tone, and factuality.

Better alternatives:

- Combine automated metrics with human evaluations.

- Run A/B tests to see what resonates with real users.

- Focus on task-specific goals rather than just numbers.
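For A/B tests with a yes/no outcome (say, whether a user gives a response a thumbs-up), a two-proportion z-test is a common way to check whether the difference between variants is more than noise. The counts below are hypothetical, and the normal approximation assumes reasonably large samples in both groups.

```python
import math


def two_proportion_z_test(success_a: int, total_a: int,
                          success_b: int, total_b: int) -> tuple:
    """Return (z, two-sided p-value) for the difference between two rates."""
    p_a = success_a / total_a
    p_b = success_b / total_b
    # Pooled rate under the null hypothesis that both variants perform the same.
    pooled = (success_a + success_b) / (total_a + total_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / total_a + 1 / total_b))
    z = (p_b - p_a) / se
    # Two-sided p-value from the standard normal CDF, computed via math.erf.
    p_value = 2 * (1 - 0.5 * (1 + math.erf(abs(z) / math.sqrt(2))))
    return z, p_value


# Hypothetical results: variant A got 120/1000 thumbs-up, variant B got 150/1000.
z, p = two_proportion_z_test(120, 1000, 150, 1000)
print(round(z, 2), round(p, 3))
```

A small p-value suggests the variants really differ, but it says nothing about why; pairing the numbers with human review of sample outputs, as the list above recommends, tells you what users are responding to.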

Pro tip: Aim for quality of outcome—not just statistical accuracy.

4. Edge Cases Can Break Your Model

Throw a generative model an oddball prompt like technical jargon or niche references and you might get gibberish back. These models often stumble with out-of-distribution or rarely seen inputs.

To improve adaptability:

- Use few-shot or zero-shot learning to increase flexibility.

- Fine-tune models with updated, domain-specific data.

- Integrate retrieval-augmented generation (RAG) for extra context.
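The core RAG loop is: find the passages most relevant to the user’s question, then place them in the prompt so the model answers from that context. This sketch uses bag-of-words cosine similarity as a simple stand-in for the embedding models and vector databases real systems use, and the knowledge-base documents are hypothetical. It stops at building the prompt; sending it to a model is a separate step.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list:
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two word-count vectors."""
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, documents: list, k: int = 2) -> list:
    """Rank documents by similarity to the query and keep the top k."""
    q = Counter(tokenize(query))
    ranked = sorted(documents, key=lambda d: cosine(q, Counter(tokenize(d))), reverse=True)
    return ranked[:k]


def build_prompt(query: str, documents: list) -> str:
    """Prepend retrieved passages so the model answers from supplied context."""
    context = "\n".join(f"- {doc}" for doc in retrieve(query, documents))
    return (
        "Answer using only the context below. If the answer is not there, say so.\n"
        f"Context:\n{context}\n\nQuestion: {query}"
    )


knowledge_base = [  # hypothetical internal documents
    "Our support desk is open 9am to 5pm on weekdays.",
    "Refunds are processed within 14 days of a request.",
    "The API rate limit is 100 requests per minute.",
]
print(build_prompt("What is the API rate limit?", knowledge_base))
```

Because the facts come from your own documents at query time, you can update the knowledge base without retraining the model.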

Pro tip: Always test your model with real-world edge cases, not just curated test sets.

5. Bias and Harmful Outputs Are Easy to Miss

Even well-trained models can produce content that is biased, insensitive, or just plain wrong. It might not be intentional, but the impact is real on brand trust, compliance, and user safety.

To keep things in check:

- Add bias detection and filtering mechanisms.

- Review outputs regularly to catch unwanted patterns.

- Build ethical review into your development process—not just at the end.
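A first line of defense can be a post-generation filter that blocks responses matching known-bad terms and keeps the originals in a queue for human review. The blocked terms below are hypothetical placeholders, and a keyword list alone will miss subtle bias; real systems usually combine rules like this with trained classifiers and the regular human review described above.

```python
import re
from dataclasses import dataclass, field


@dataclass
class OutputFilter:
    """Screen generated text against a blocklist and queue hits for human review."""

    blocked_terms: list
    fallback: str = "Sorry, I can't help with that request."
    review_queue: list = field(default_factory=list)

    def __post_init__(self) -> None:
        # One case-insensitive, whole-word pattern; None if the list is empty.
        if self.blocked_terms:
            alternatives = "|".join(re.escape(t) for t in self.blocked_terms)
            self._pattern = re.compile(rf"\b(?:{alternatives})\b", re.IGNORECASE)
        else:
            self._pattern = None

    def apply(self, response: str) -> str:
        """Return the response unchanged, or the fallback if it hits the blocklist."""
        if self._pattern is not None and self._pattern.search(response):
            self.review_queue.append(response)  # keep the original for reviewers
            return self.fallback
        return response


flt = OutputFilter(blocked_terms=["placeholder-slur", "made-up-insult"])
print(flt.apply("Here is your summary."))
print(flt.apply("You are a Made-Up-Insult."))
print(len(flt.review_queue))
```

Reviewing the queue regularly is what turns this from a blunt filter into a feedback loop: recurring patterns point to prompts, data, or fine-tuning that need attention.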

6. Privacy and Security Risks Are Real

Generative AI can unintentionally memorize personal and sensitive data from emails, internal chats, and other user data, and then regurgitate it in its outputs.

So, while building a GenAI solution, make sure to:

- Apply privacy-preserving techniques like differential privacy.

- Be cautious with user-generated or unfiltered training data.

- Conduct regular audits to catch leaks early.
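The core idea of differential privacy is to add carefully calibrated noise so that no single person’s record noticeably changes a result. The sketch below shows the classic Laplace mechanism on a counting query, where one person can change the count by at most 1. Note that this is a different setting from training: differentially private model training usually relies on DP-SGD (per-example gradient clipping plus noise), available in libraries such as Opacus for PyTorch and TensorFlow Privacy. The epsilon value here is illustrative.

```python
import random


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Sample Laplace(0, scale) as the difference of two exponential draws."""
    return rng.expovariate(1 / scale) - rng.expovariate(1 / scale)


def private_count(true_count: int, epsilon: float, rng: random.Random) -> float:
    """Laplace mechanism for a counting query (sensitivity 1).

    Smaller epsilon means more noise and stronger privacy.
    """
    sensitivity = 1.0
    return true_count + laplace_noise(sensitivity / epsilon, rng)


rng = random.Random(7)
# e.g. "how many users mentioned a diagnosis?" released with epsilon = 0.5
print(private_count(100, epsilon=0.5, rng=rng))
```

The released number is close to the truth on average, but any individual answer is noisy enough that an attacker cannot confidently tell whether one specific person was in the data.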

Smarter Alternatives If You’re Not Ready to Build a GenAI Solution from Scratch

Building a generative AI model from scratch isn’t always necessary or feasible. If you’re limited by time, resources, or technical expertise, there are flexible ways to put generative AI to work without getting into complex model creation. Here are four options to consider:

1. Try Pre-Trained Generative Models

You don’t have to start from zero. Big AI companies like OpenAI, Google, and Meta have already developed powerful generative models. You can adjust these models to suit your needs without spending months on training.

When to choose:

- You need fast results

- You have limited data for training

- You want to avoid managing infrastructure

2. Use AI APIs & Services

For many use cases, connecting to an API is the fastest route to production. Companies like OpenAI, Cohere, and Hugging Face provide ready-to-use APIs for text, image, and even code generation.

When to choose this:

- You want minimal setup and fast deployment

- You’re embedding GenAI features into an existing app

- You need pay-as-you-go flexibility
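To give a sense of how little setup an API integration needs, here is a standard-library sketch of a call to OpenAI’s Chat Completions endpoint. It assumes the request and response shape documented by OpenAI at the time of writing, an API key in the `OPENAI_API_KEY` environment variable, and an example model name that you should check against the provider’s current documentation. Other providers use different endpoints and payloads, and official SDKs wrap all of this for you.

```python
import json
import os
import urllib.request

API_URL = "https://api.openai.com/v1/chat/completions"


def build_request(prompt: str, model: str, api_key: str) -> urllib.request.Request:
    """Build (but do not send) a chat-completion HTTP request."""
    body = json.dumps({
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }).encode("utf-8")
    return urllib.request.Request(
        API_URL,
        data=body,
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def ask(prompt: str, model: str = "gpt-4o-mini") -> str:
    """Send the request and return the first choice's text.

    The default model name is only an example; requires OPENAI_API_KEY.
    """
    req = build_request(prompt, model, os.environ["OPENAI_API_KEY"])
    with urllib.request.urlopen(req, timeout=30) as resp:
        data = json.load(resp)
    return data["choices"][0]["message"]["content"]
```

Keeping request construction separate from sending makes it easy to log, test, or swap providers without touching the rest of your app.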

3. Team Up with GenAI Experts like Rishabh Software

If you’ve got a plan but lack AI know-how, consider partnering with AI specialists. Rishabh Software, for example, specializes in building, fine-tuning, and integrating generative AI solutions across industries.

When to choose this:

- You need a custom solution, not just a plug-in

- You want expert guidance across strategy, development, and deployment

- You’re focused on long-term scalability and reliability

4. Adopt Open-Source Models

The open-source ecosystem is thriving. Platforms like Hugging Face, GitHub, and LangChain offer access to pre-trained models and development frameworks.

When to choose this:

- You want full control and customization

- You have in-house teams who can handle integration

- You’re building in regulated or proprietary environments

However, open-source models often require deeper engineering effort to integrate, scale, and secure properly. Rishabh Software can help you integrate and optimize these models so everything runs smoothly and scales as needed, all without the hefty price tag of proprietary AI.

How Rishabh Software Can Be Your Generative AI Development Partner

Every generative AI solution is a mix of trial and error, along with some exciting breakthroughs. But with the right technology partner, the entire process becomes quicker, smarter, and way more effective.

At Rishabh Software, we specialize in developing GenAI solutions that are not only technically sound but also business-aligned.

Whether you’re:

- Looking to develop a custom generative model from the ground up

- Fine-tuning pre-trained LLMs for a niche use case

- Integrating AI into your existing software ecosystem

- Or leveraging open-source frameworks with added flexibility

Either way, we bring the expertise, tools, and delivery capability to make it happen.

Frequently Asked Questions

Q: How long does it take to develop a Generative AI solution or model?

A: The timeline can really vary depending on how complex the project is, the availability of data, and how much customization is needed. Generally, you might be looking at a few weeks for fine-tuning, but if you’re building a model from scratch, it could take several months.

Q: Why should you invest in Generative AI solution development?

A: Well, Generative AI can significantly boost automation, personalization, and content creation, which in turn drives efficiency and sparks innovation. It helps businesses gain a competitive advantage by facilitating smarter decision-making and enhancing user experiences.

Footnotes:
